News

NEW! (August 2025) We are hiring a Senior Research Scientist in my team at BAM.

Publications [Google Scholar]

Greenback Bears and Fiscal Hawks: Finance is a Jungle and Text Embeddings Must Adapt

Peter Anderson, Mano Vikash Janardhanan, Jason He, Wei Cheng, Charlie Flanagan

In Conference on Empirical Methods in Natural Language Processing: Industry Track (EMNLP), 2024.

Prompt expansion for adaptive text-to-image generation

Siddhartha Datta, Alexander Ku, Deepak Ramachandran, Peter Anderson

In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (ACL), 2024

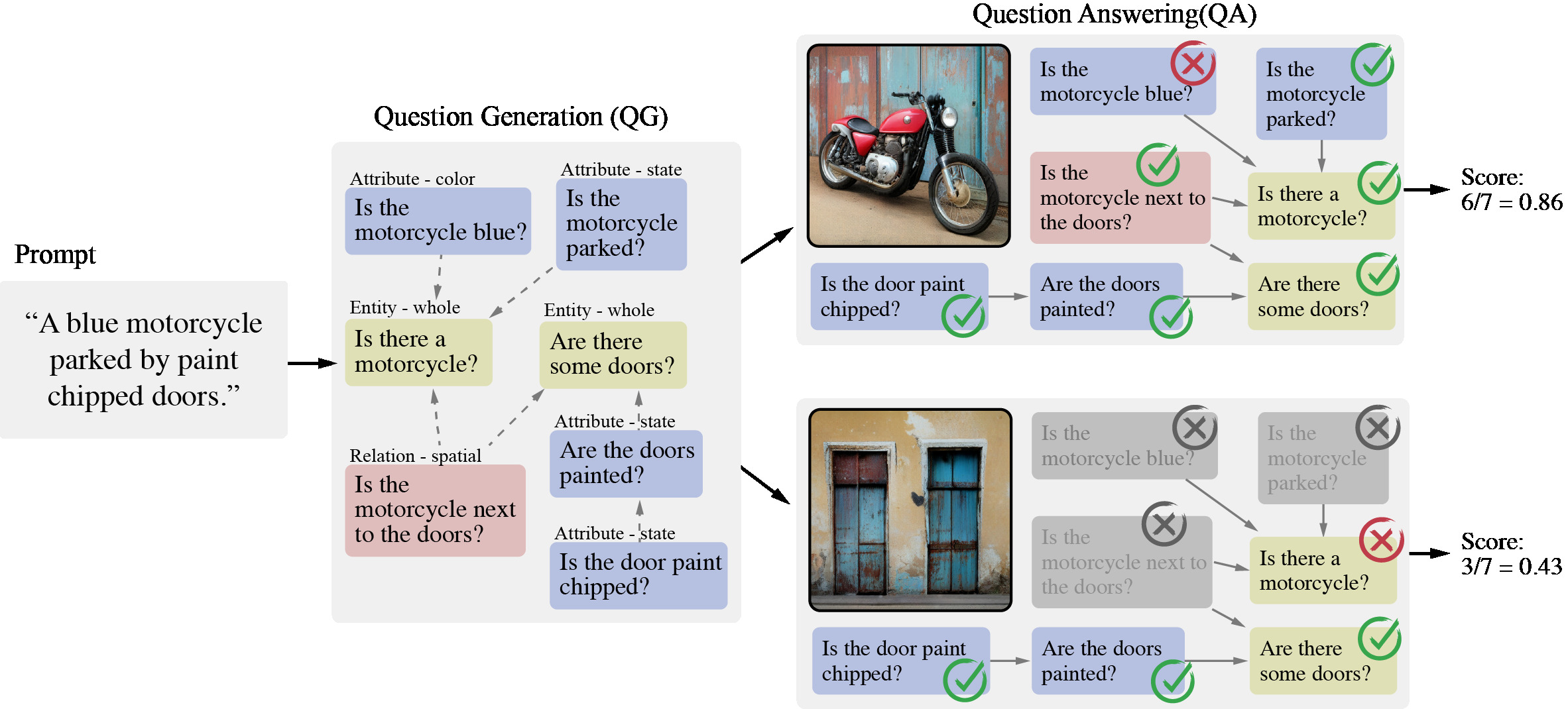

Davidsonian Scene Graph: Improving Reliability in Fine-grained Evaluation for Text-Image Generation

Jaemin Cho, Yushi Hu, Roopal Garg, Peter Anderson, Ranjay Krishna, Jason Baldridge, Mohit Bansal, Jordi Pont-Tuset, Su Wang

In International Conference on Learning Representations (ICLR), 2024

Imagen Editor and EditBench: Advancing and Evaluating Text-Guided Image Inpainting

Su Wang*, Chitwan Saharia*, Ceslee Montgomery*, Jordi Pont-Tuset, Shai Noy, Stefano Pellegrini, Yasumasa Onoe, Sarah Laszlo, David J. Fleet, Radu Soricut, Jason Baldridge, Mohammad Norouzi†, Peter Anderson†, William Chan†

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023. Highlight Presentation [Top 2.5%]

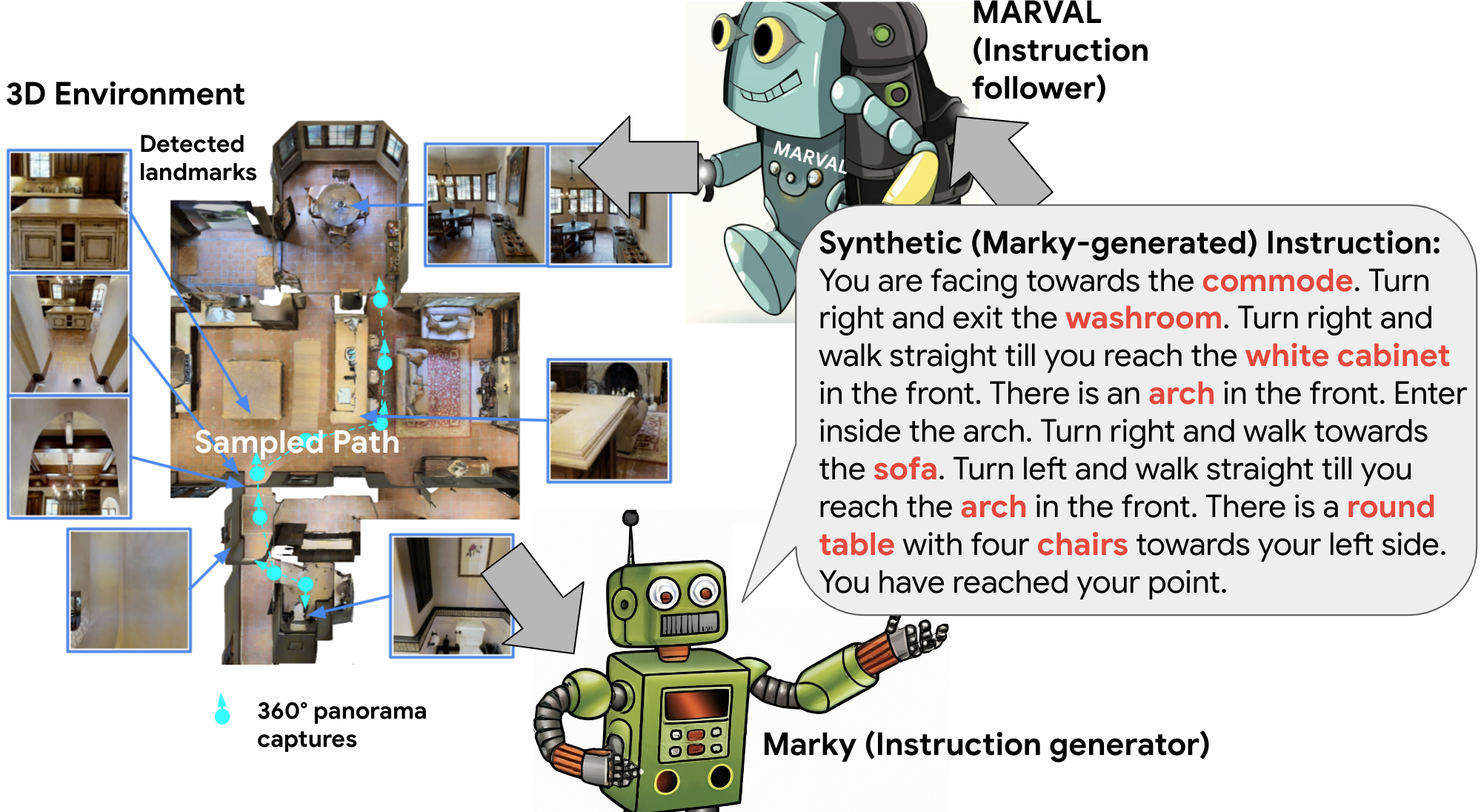

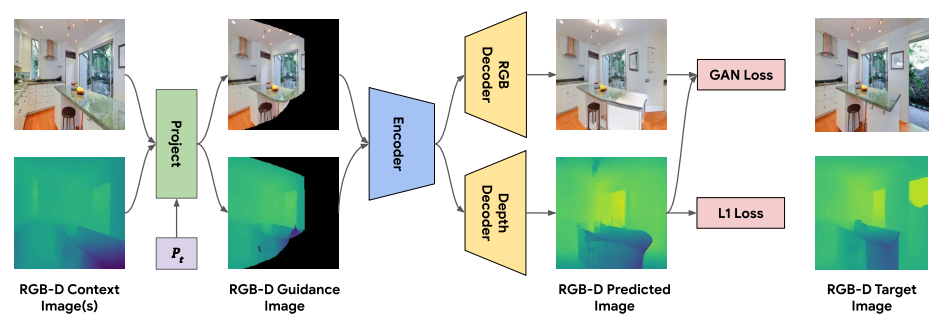

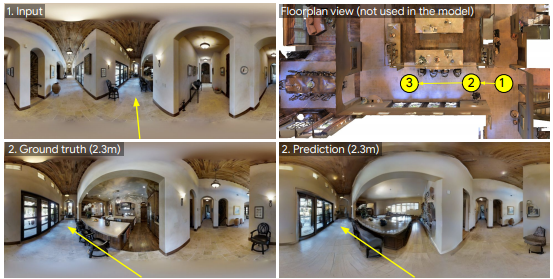

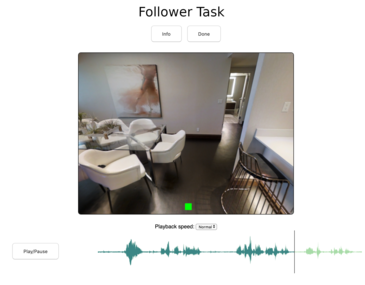

A New Path: Scaling Vision-and-Language Navigation with Synthetic Instructions and Imitation Learning

Aishwarya Kamath*, Peter Anderson*, Su Wang, Jing Yu Koh, Alexander Ku, Austin Waters, Yinfei Yang, Jason Baldridge, Zarana Parekh

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

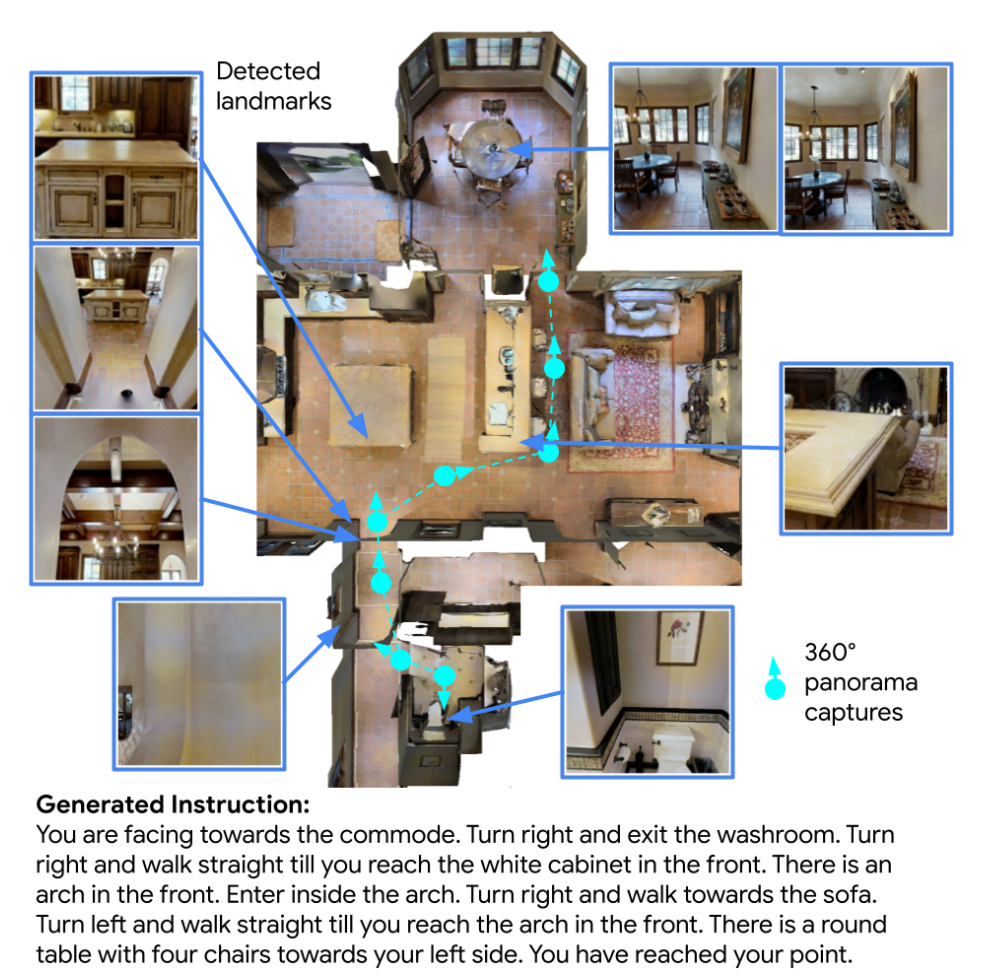

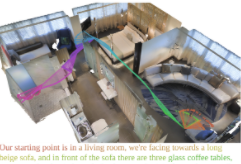

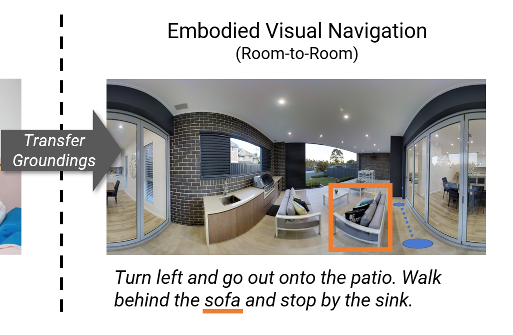

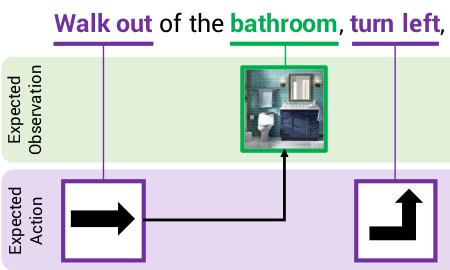

Less is More: Generating Grounded Navigation Instructions from Landmarks

Su Wang, Ceslee Montgomery, Jordi Orbay, Vighnesh Birodkar, Aleksandra Faust, Izzeddin Gur, Natasha Jaques, Austin Waters, Jason Baldridge, Peter Anderson

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

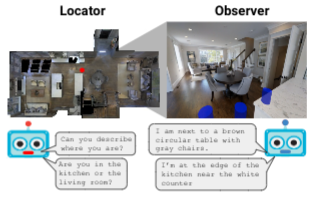

Room-Across-Room: Multilingual Vision-and-Language Navigation with Dense Spatiotemporal Grounding

Alex Ku*, Peter Anderson*, Roma Patel, Eugene Ie, Jason Baldridge

In Conference on Empirical Methods in Natural Language Processing (EMNLP), 2020.

REVERIE: Remote Embodied Visual Referring Expression in Real Indoor Environments

Yuankai Qi, Qi Wu, Peter Anderson, Xin Wang, William Yang Wang, Chunhua Shen, Anton van den Hengel

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020. Oral Presentation [Top 5.7%]

Visual Landmark Selection for Generating Grounded and Interpretable Navigation Instructions

Sanyam Agarwal, Devi Parikh, Dhruv Batra, Peter Anderson, Stefan Lee

In CVPR Workshop on Deep Learning for Semantic Visual Navigation, 2019.

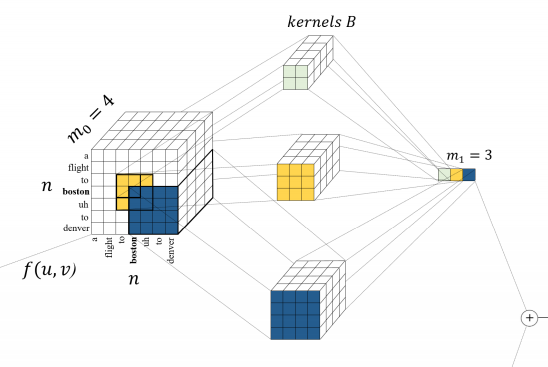

Disfluency Detection using Auto-Correlational Neural Networks

Paria Jamshid Lou, Peter Anderson, Mark Johnson

In Conference on Empirical Methods in Natural Language Processing (EMNLP), 2018.

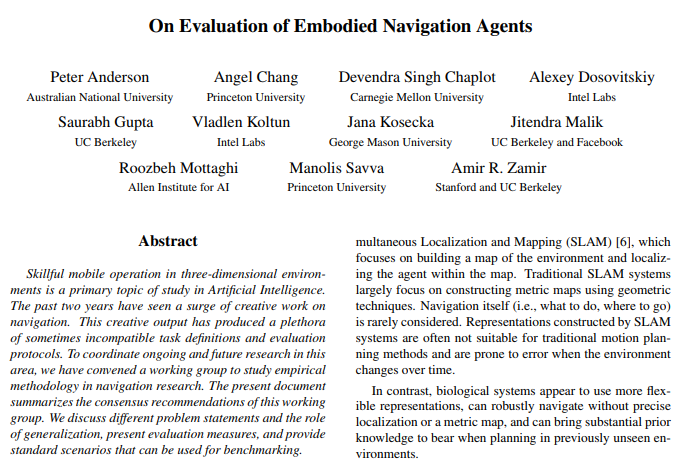

On Evaluation of Embodied Navigation Agents

Peter Anderson, Angel Chang, Devendra Singh Chaplot, Alexey Dosovitskiy, Saurabh Gupta, Vladlen Koltun, Jana Kosecka, Jitendra Malik, Roozbeh Mottaghi, Manolis Savva, Amir R. Zamir

arXiv preprint 1807.06757, 2018.

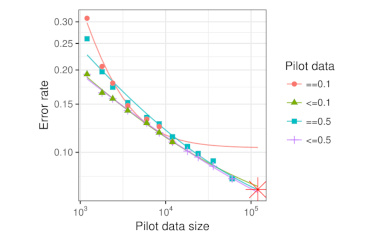

Predicting accuracy on large datasets from smaller pilot data

Mark Johnson, Peter Anderson, Mark Dras, Mark Steedman

In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (ACL), 2018. Oral Presentation [Top 4.6%]

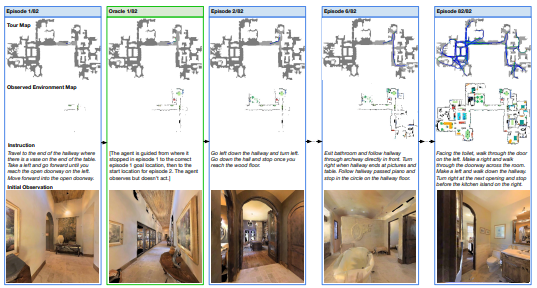

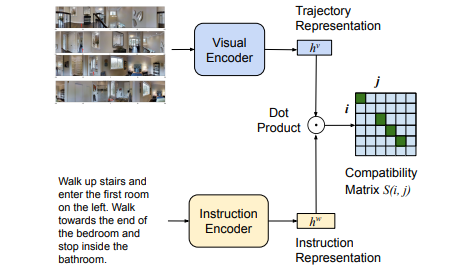

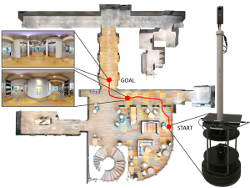

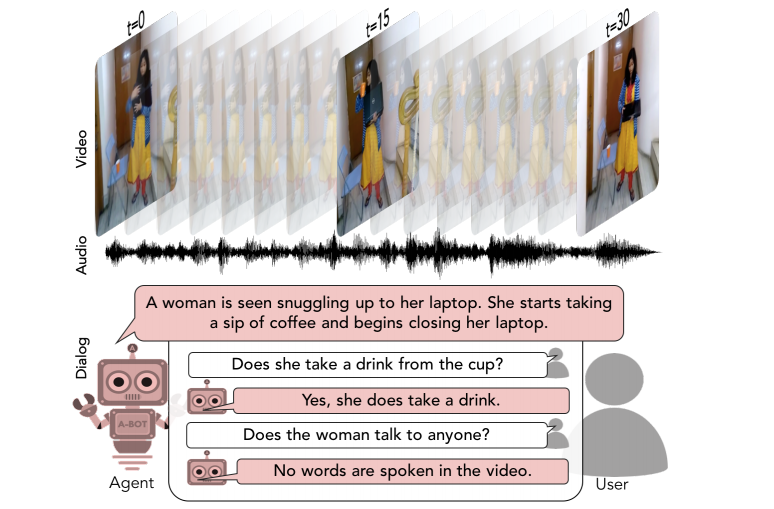

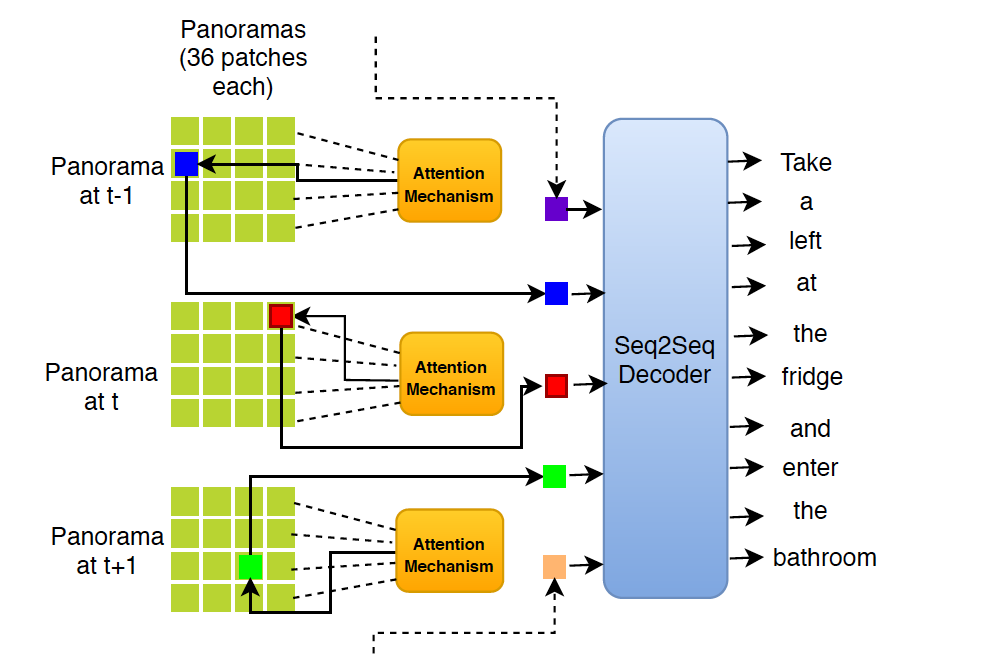

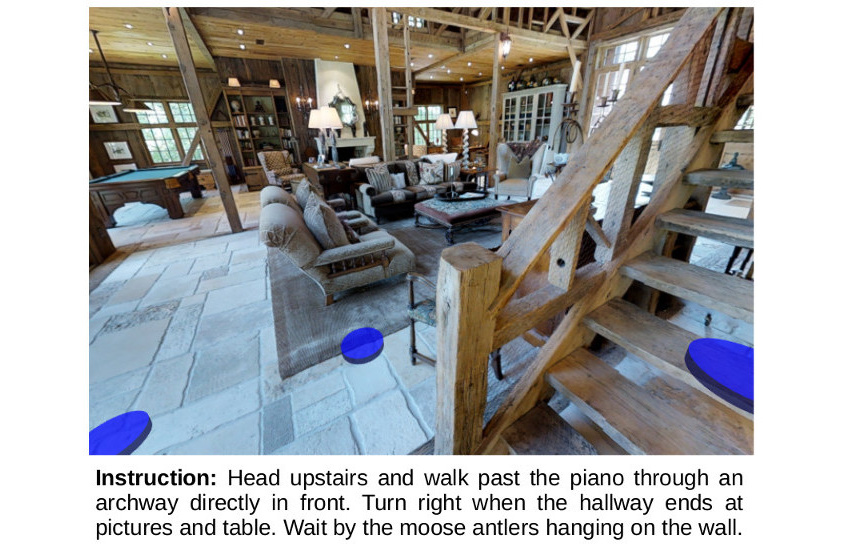

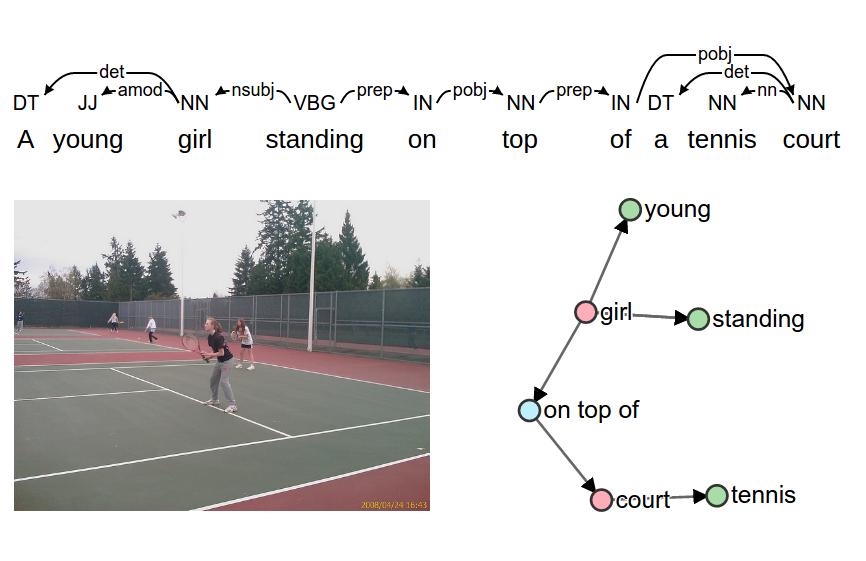

Vision-and-Language Navigation: Interpreting visually-grounded navigation instructions in real environments

Peter Anderson, Qi Wu, Damien Teney, Jake Bruce, Mark Johnson, Niko Sünderhauf, Ian Reid, Stephen Gould, Anton van den Hengel

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018. Spotlight Presentation [Top 8.9%]

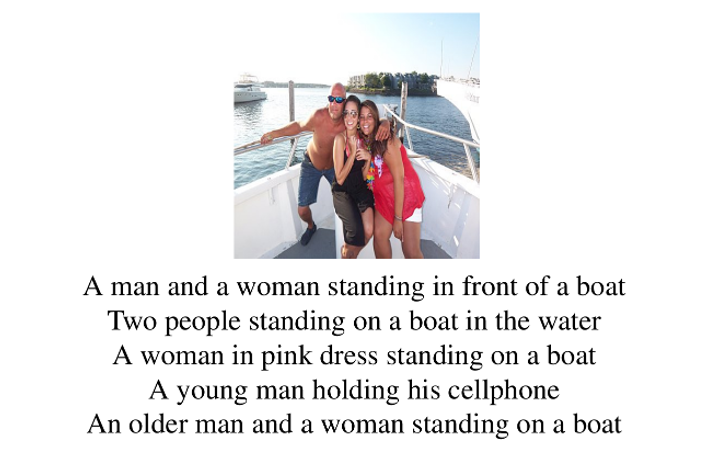

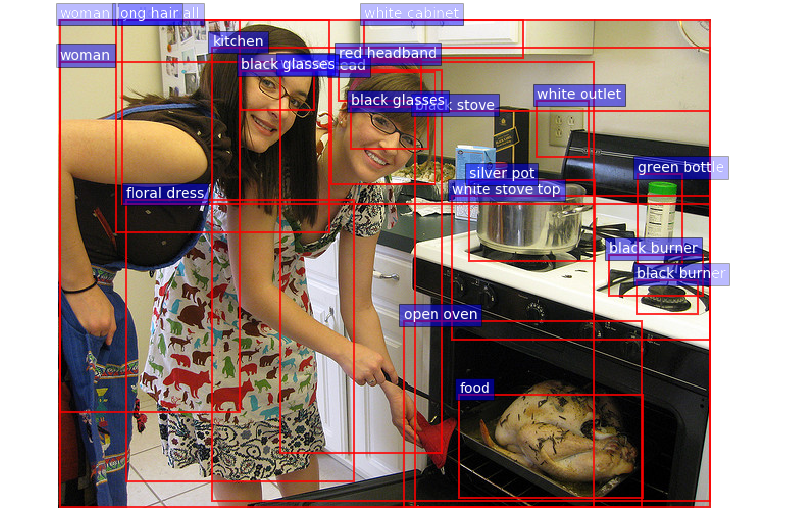

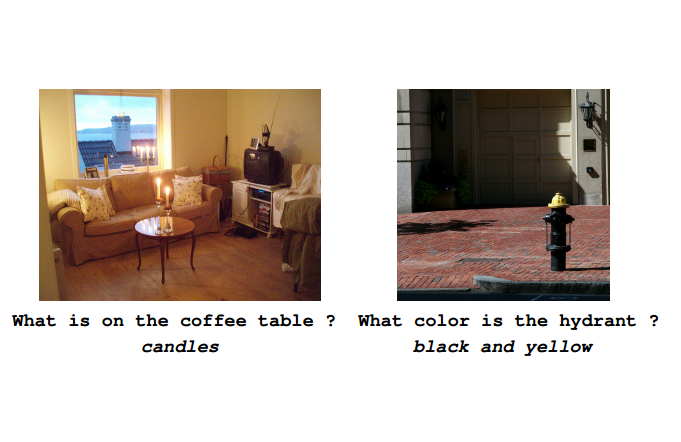

Bottom-Up and Top-Down Attention for Image Captioning and Visual Question Answering

Peter Anderson, Xiaodong He, Chris Buehler, Damien Teney, Mark Johnson, Stephen Gould, Lei Zhang

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018. Oral Presentation [Top 2.1%]

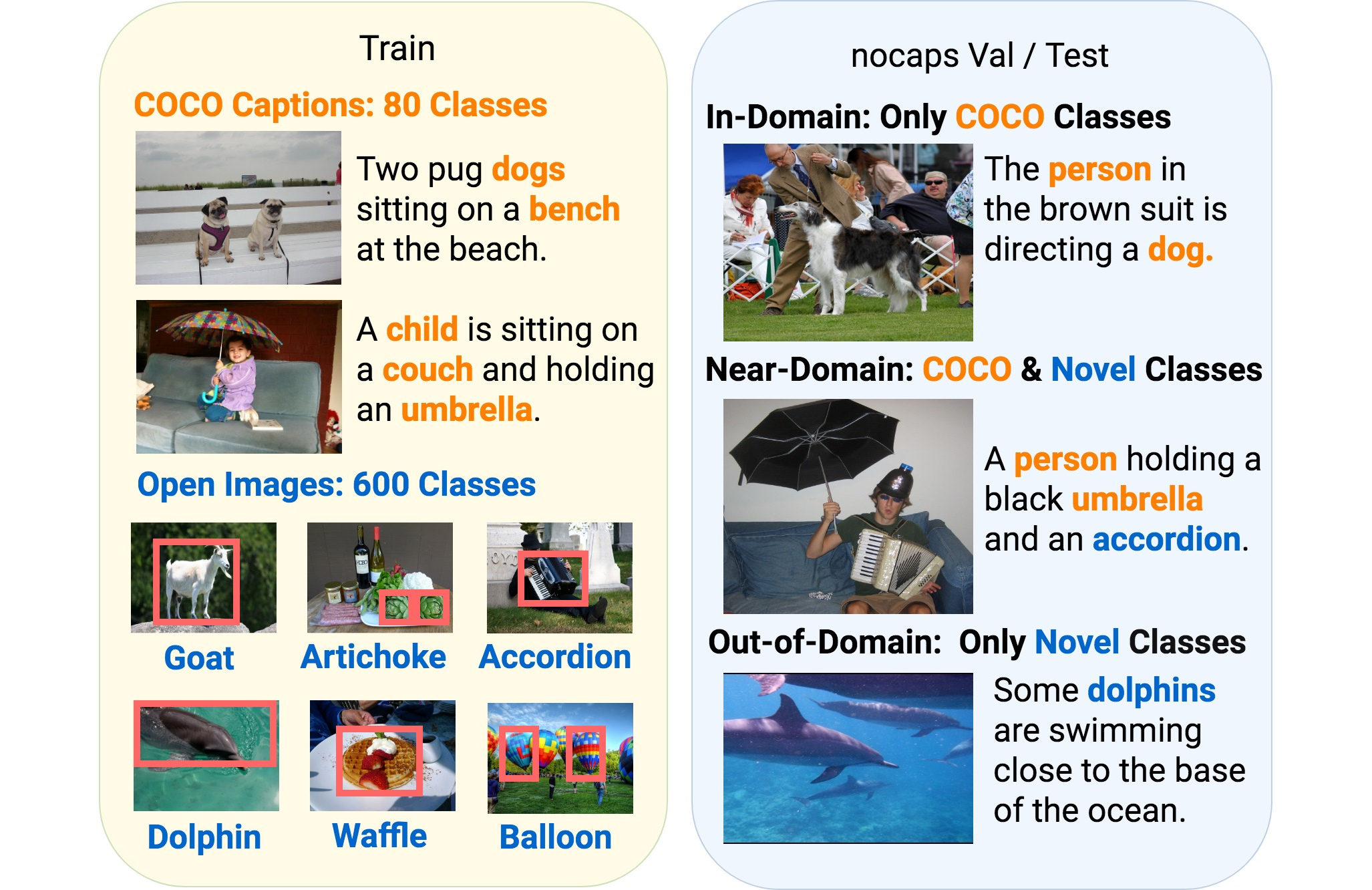

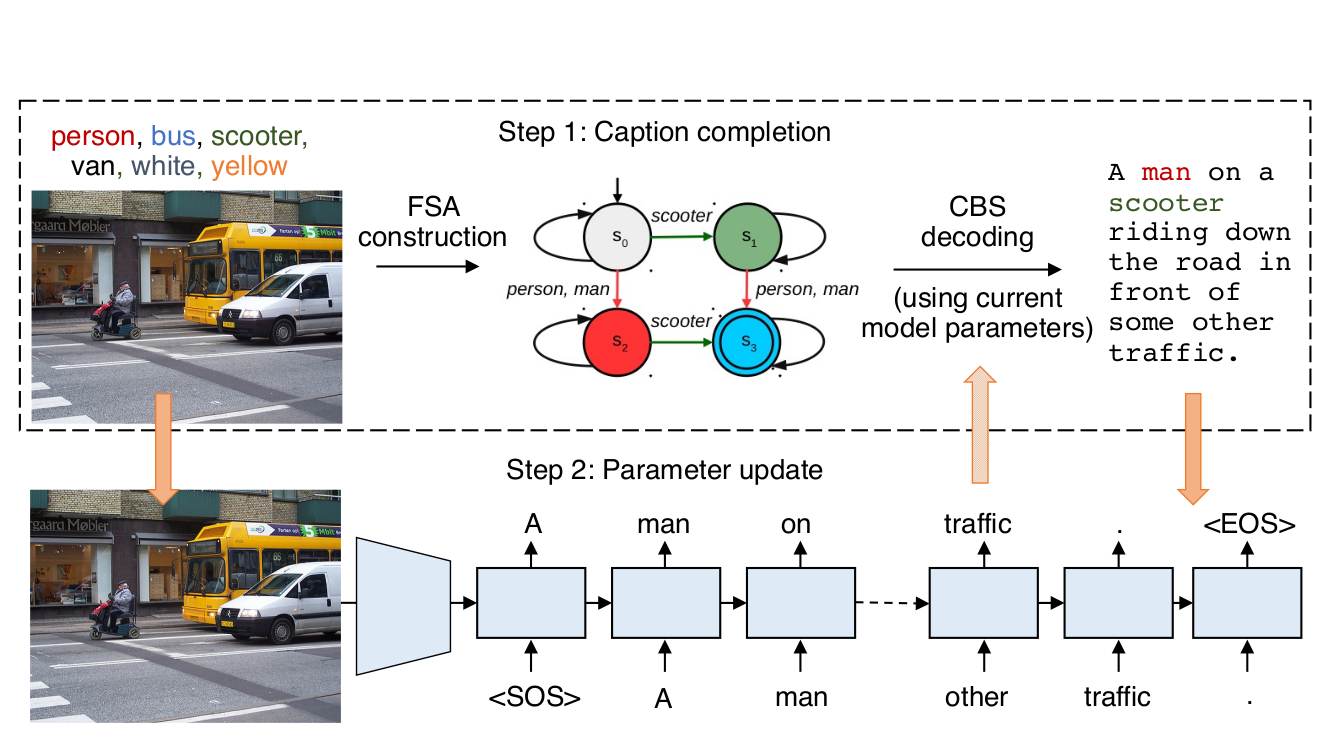

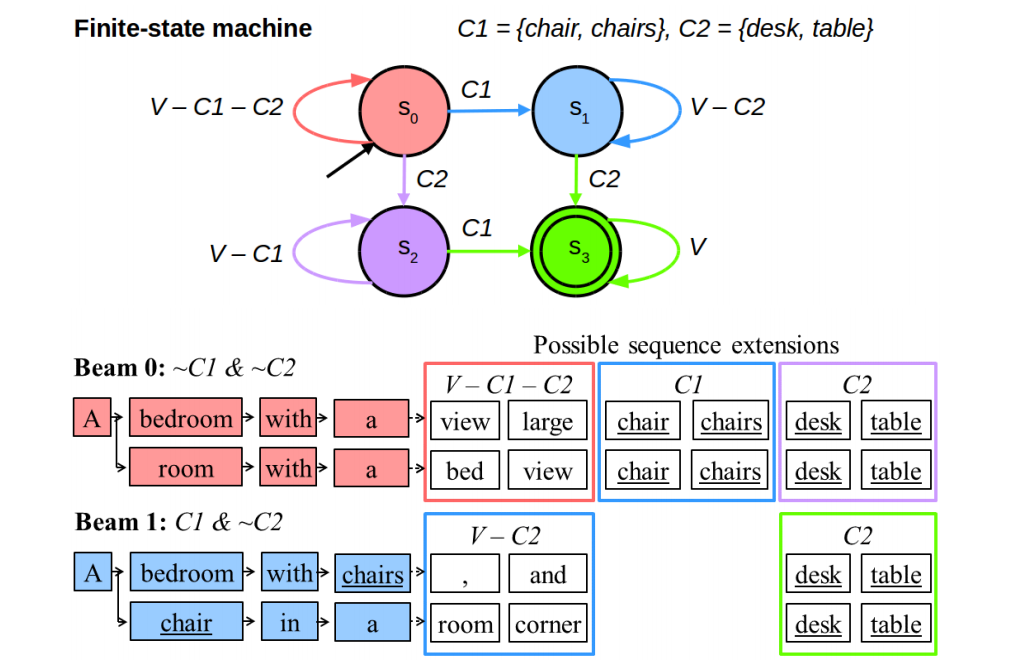

Guided Open Vocabulary Image Captioning with Constrained Beam Search

Peter Anderson, Basura Fernando, Mark Johnson and Stephen Gould

In Conference on Empirical Methods in Natural Language Processing (EMNLP), 2017.

PhD Thesis

Undergrad

An ICP Inspired Inverse Sensor Model with Unknown Data Association

Peter Anderson, Youssef Hunter and Bernhard Hengst

In IEEE International Conference on Robotics and Automation (ICRA), 2013.

Fast Monocular Visual Compass for a Computationally Limited Robot

Peter Anderson and Bernhard Hengst

In Proceedings of the RoboCup International Symposium (RoboCup), 2013. Oral Presentation

RoboCup Standard Platform League - rUNSWift 2012 Innovations

Sean Harris, Peter Anderson, Belinda Teh, Youssef Hunter, Roger Liu, Bernhard Hengst, Ritwik Roy, Sam Li, Carl Chatfield

In Australasian Conference on Robotics and Automation (ACRA), 2012.

Robot Localisation Using Natural Landmarks

Peter Anderson, Yongki Yusmanthia, Bernhard Hengst and Arcot Sowmya

In Proceedings of the RoboCup International Symposium (RoboCup), 2012. Oral Presentation, Best Paper Finalist

Selected Talks

Massive Datasets for Language-Guided Navigation Agents and Where to Find Them. CVPR 2021 Embodied AI workshop invited talk.

Vision-and-Language Navigation: Interpreting visually-grounded navigation instructions in real environments. CVPR 2018 spotlight oral.

Bottom-Up and Top-Down Attention for Image Captioning and Visual Question Answering. CVPR 2018 oral presentation.

Vision and language: attention, navigation, and making it work ‘in the wild’. Presented at the VQA Challenge and Visual Dialog Workshop at CVPR 2018.

Bio

I am an AI Researcher specializing in multimodal machine learning. Most of my research has been at the intersection of Natural Language Processing and Computer Vision, developing large-scale generative models that produce language, images, and/or actions. During my career I have moved between the tech (Google, Microsoft) and finance (Balyasny, Credit Suisse, Goldman Sachs) industries. My current role developing AI models for investing combines both of these aspects of my career. I completed my PhD in Computer Science at the Australian National University in 2018, where I was advised by Stephen Gould. Previously I was a sell-side securities analyst with Credit Suisse in Sydney. I have the (fairly rare) distinction of winning two university medals, in Finance (from the University of Sydney) and Computer Engineering (from the University of New South Wales).

Head of Research, Applied AI at Balyasny Asset Management

Senior Research Scientist, Google

Research Scientist, Georgia Tech

PhD (Computer Science), Australian National University

Engineer, Sabre Autonomous Solutions

Flounder, FrameFish

BEng (Computer), University of NSW

Securities Analyst, Credit Suisse

BComm (Finance & Economics), University of Sydney

Other Projects

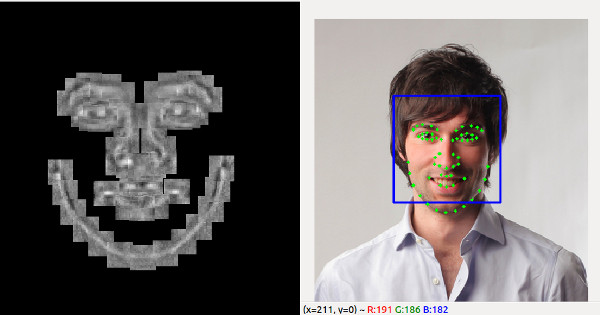

FrameFish

FrameFish was an eyewear virtual try-on system I developed for ecommerce websites. At the time it was much faster than competing systems, taking around 1 second to generate a virtual try-on image of a person wearing a selected pair of glasses or sunglasses (versus ~10 seconds for other web-based systems in 2013). FrameFish received an Innovate NSW grant and was featured on Sky Business News and in the UNSW Young Entrepreneurs series.